Web Scraping Dynamic Websites with Python: A Step-by-Step Guide

TL;DR

To scrape dynamic websites, use the Python libraries Selenium (for browser interaction) and Beautiful Soup (for parsing HTML). This approach lets you extract data from pages whose content is loaded asynchronously.

Understanding and using these tools will help you navigate the complexities of dynamic web scraping.

Enhance your web scraping efficiency and accuracy by automating with Bardeen's advanced Scraper integration.

Web scraping dynamic websites can be a daunting task, as the content is often loaded through JavaScript and AJAX calls, making it challenging to extract data using traditional scraping methods. In this step-by-step Python tutorial, we'll guide you through the process of scraping dynamic websites effectively in 2024. We'll cover the essential tools, libraries, and best practices you need to tackle modern web scraping challenges and retrieve valuable data from dynamic web pages.

Understanding Dynamic Websites and Their Challenges

Dynamic websites generate content on the fly based on user interactions, database queries, or real-time data. Unlike static websites that serve pre-built HTML pages, dynamic sites rely on server-side processing and client-side JavaScript to create personalized experiences.

However, this dynamic nature poses challenges for web scraping:

- Content loaded through AJAX calls may not be immediately available in the initial HTML response

- JavaScript rendering can modify the DOM, making it difficult to locate desired elements

- User interactions like clicks, scrolls, or form submissions may be required to access certain data

Traditional scraping techniques that rely on parsing static HTML often fall short when dealing with dynamic websites. Scrapers need to be equipped with the ability to execute JavaScript, wait for asynchronous data loading, and simulate user actions to extract information effectively.
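To see the problem concretely, the sketch below runs the same extraction over two snippets: a hypothetical initial server response, where the list is still an empty placeholder waiting for JavaScript, and the same list after rendering. It uses only Python's built-in html.parser; the TextByClass and text_in_class names and the products class are illustrative assumptions, not part of any site or library.

```python
from html.parser import HTMLParser

# Hypothetical initial server response for a JavaScript-driven page:
# the product list stays empty until client-side code fills it in.
INITIAL_HTML = """
<html><body>
  <div id="app"><ul class="products"></ul></div>
  <script src="/static/bundle.js"></script>
</body></html>
"""

# Elements that never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class TextByClass(HTMLParser):
    """Collect text found inside elements that carry a given CSS class."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.depth = 0  # > 0 while inside a matching element
        self.texts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth or self.class_name in classes:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth and data.strip():
            self.texts.append(data.strip())


def text_in_class(html, class_name):
    """Return the non-empty text fragments inside `class_name` elements."""
    parser = TextByClass(class_name)
    parser.feed(html)
    return parser.texts


if __name__ == "__main__":
    rendered = '<ul class="products"><li>Widget</li><li>Gadget</li></ul>'
    print("initial HTML:", text_in_class(INITIAL_HTML, "products"))
    print("after rendering:", text_in_class(rendered, "products"))
```

On a real page you would pass the fetched HTML (for example, the text of a requests response) to text_in_class(). An empty result for content you can clearly see in the browser is a good hint that the page builds it client-side and needs a browser-based tool.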

Setting Up Your Python Environment for Dynamic Scraping

To get started with dynamic web scraping in Python, you need to set up your development environment with the necessary tools and libraries. Here's how to do it step by step:

- Install Python: Download and install the latest version of Python from the official website (https://www.python.org). Make sure to add Python to your system's PATH during the installation process.

- Set up a virtual environment (optional but recommended): Create a virtual environment to keep your project's dependencies isolated. Open a terminal and run: python -m venv myenv, then activate it with source myenv/bin/activate (macOS/Linux) or myenv\Scripts\activate (Windows).

- Install required libraries: Use pip, the Python package manager, to install the essential libraries for dynamic web scraping: pip install requests beautifulsoup4 selenium

- requests: A library for making HTTP requests to web pages

- beautifulsoup4: A library for parsing HTML and XML documents

- selenium: A library for automating web browsers and interacting with dynamic web pages

- Install a web driver: Selenium requires a web driver to control the browser. Popular choices include ChromeDriver for Google Chrome and GeckoDriver for Mozilla Firefox. Download the appropriate driver for your browser and operating system, and ensure it's accessible from your system's PATH. Selenium 4.6 and later also include Selenium Manager, which can download a matching driver automatically when none is found on your PATH.


With these steps completed, you have a Python environment ready for dynamic web scraping. You can now create a new Python file and start writing your scraping script, importing the installed libraries as needed.
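Before writing a scraper, it can help to confirm the packages are visible to the interpreter you're actually using (a common problem when a virtual environment isn't activated). This minimal check uses only the standard library; check_environment is a hypothetical helper name, and the mapping from pip names to import names (beautifulsoup4 is imported as bs4) is the part worth noting.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version

# pip distribution name -> module name used in `import` statements.
REQUIRED = {
    "requests": "requests",
    "beautifulsoup4": "bs4",
    "selenium": "selenium",
}


def check_environment(required=REQUIRED):
    """Return {distribution: installed version, or None if not importable}."""
    report = {}
    for dist_name, module_name in required.items():
        if importlib.util.find_spec(module_name) is None:
            report[dist_name] = None  # not importable from this interpreter
            continue
        try:
            report[dist_name] = version(dist_name)
        except PackageNotFoundError:
            # Importable, but no installed distribution metadata was found.
            report[dist_name] = "unknown"
    return report


if __name__ == "__main__":
    for name, ver in check_environment().items():
        print(f"{name}: {ver or 'MISSING (pip install ' + name + ')'}")
```

Run it with the same python command you'll use for the scraper; any MISSING line points to the package to install in that environment.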

Save time and automate your scraping tasks. Use Bardeen to automate web scraping with ease.


Essential Tools and Libraries for Dynamic Web Scraping

When it comes to dynamic web scraping in Python, several powerful libraries stand out:

- Selenium: Selenium is a versatile tool that automates web browsers, allowing you to interact with dynamic web pages as if you were a human user. It's ideal for scraping websites that heavily rely on JavaScript to load content dynamically. Selenium supports various programming languages, including Python, and works with popular browsers like Chrome, Firefox, and Safari.

- Requests-HTML: Requests-HTML is a library that combines the simplicity of the Requests library with PyQuery-based HTML parsing and JavaScript rendering through a headless Chromium browser (driven by pyppeteer). It provides a convenient way to make HTTP requests, render JavaScript, and parse the resulting HTML. Requests-HTML is particularly useful for scraping websites that use AJAX to load data dynamically without requiring a full page reload.

- Scrapy: Scrapy is a comprehensive web scraping framework that offers a wide range of features for extracting data from websites. While it's primarily designed for scraping static websites, Scrapy can be extended with additional libraries like Selenium or Splash to handle dynamic content. Scrapy provides a structured approach to web scraping tasks, making it suitable for large-scale projects and complex scraping tasks.

The choice of tool depends on the nature of the dynamic content you need to scrape. If the website heavily relies on user interactions and complex JavaScript rendering, Selenium is the go-to choice. It allows you to simulate user actions like clicking buttons, filling forms, and scrolling, making it possible to access dynamically loaded content.

On the other hand, if the website uses AJAX to load data dynamically without requiring user interactions, Requests-HTML can be a more lightweight and efficient solution. It allows you to make HTTP requests, render JavaScript, and extract the desired data using familiar HTML parsing techniques.

For large-scale scraping projects or when you need a more structured and scalable approach, Scrapy is a powerful framework to consider. It provides built-in support for handling cookies, authentication, and concurrent requests, making it suitable for scraping websites with complex structures and large amounts of data.

Practical Examples: Scraping a Dynamic Website

Let's walk through a step-by-step example of using Selenium to interact with a dynamic website and retrieve dynamically loaded data. We'll use the Angular website as our target.

- Install Selenium: First, make sure you have Selenium installed. You can install it using pip:

pip install selenium webdriver-manager

- Set up the WebDriver: Selenium requires a WebDriver to interface with the chosen browser. If you're using Chrome, that's ChromeDriver. You can install it yourself and put it on your system's PATH, or let the webdriver-manager package download a matching version automatically, as the code below does.

- Import necessary modules: In your Python script, import the required Selenium modules:


from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service as ChromeService
from webdriver_manager.chrome import ChromeDriverManager

- Create a new instance of the WebDriver:

driver = webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()))

- Navigate to the target website:

url = 'https://angular.io/'
driver.get(url)

- Interact with the page: You can now use Selenium's methods to interact with the page, such as clicking buttons, filling out forms, or scrolling.

- Locate and extract data: Once the dynamic content has loaded, you can locate elements using methods like find_element() or find_elements(). For example, to print the h2 heading inside each element with the class "text-container":

elements = driver.find_elements(By.CLASS_NAME, 'text-container')
for container in elements:
    heading = container.find_element(By.TAG_NAME, 'h2').text
    print(heading)

- Close the WebDriver: After scraping the desired data, close the WebDriver:

driver.quit()
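For the "interact with the page" step, a frequent case is an infinite-scroll page that loads more items as you reach the bottom. The loop below is a sketch that assumes the page's document.body.scrollHeight grows whenever new content arrives, which holds for many but not all sites. It only relies on the WebDriver's execute_script() method; scroll_until_stable and its parameters are hypothetical.

```python
import time


def scroll_until_stable(driver, pause=1.0, max_rounds=20, sleep=time.sleep):
    """Scroll to the bottom until the page height stops growing.

    `driver` is a Selenium WebDriver; only execute_script() is used.
    Returns the number of scroll rounds performed (at most `max_rounds`).
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for rounds in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        sleep(pause)  # give the page time to request and render the next batch
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return rounds  # nothing new appeared, so assume we reached the end
        last_height = new_height
    return max_rounds  # safety cap for pages that never stop loading
```

After creating the driver and calling driver.get(url), you would call scroll_until_stable(driver, pause=2) before locating elements. The fixed pause is a simple heuristic; an explicit wait on a specific element is more reliable when one exists.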


By following these steps, you can successfully scrape dynamic websites using Selenium in Python. Remember to adapt the code based on the specific website you're targeting and the data you want to extract.
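The steps above assume the content has already loaded when find_elements() runs, and they never use Beautiful Soup. The sketch below addresses both, under a few assumptions: Selenium 4.6 or later (so Selenium Manager finds ChromeDriver and webdriver-manager isn't needed), a recent Chrome that accepts the --headless=new flag, and that angular.io still uses the text-container markup from the example, which may no longer be true. fetch_rendered_html and parse_headings are hypothetical names. WebDriverWait with expected_conditions.presence_of_element_located blocks until the element exists (or raises TimeoutException), and driver.page_source is then handed to Beautiful Soup for parsing.

```python
from bs4 import BeautifulSoup


def fetch_rendered_html(url, wait_for_class, timeout=10):
    """Load `url` in headless Chrome and return the HTML after JavaScript runs.

    Waits up to `timeout` seconds for an element with CSS class
    `wait_for_class`; Selenium raises TimeoutException if none appears.
    """
    # Imported here so parse_headings() can be reused on saved HTML
    # without Selenium or a browser being installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # no visible browser window
    driver = webdriver.Chrome(options=options)  # Selenium Manager finds the driver
    try:
        driver.get(url)
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CLASS_NAME, wait_for_class))
        )
        return driver.page_source  # the DOM as rendered, not the initial response
    finally:
        driver.quit()  # release the browser even if the wait times out


def parse_headings(html, container_class="text-container"):
    """Return the text of every <h2> nested inside `container_class` elements."""
    soup = BeautifulSoup(html, "html.parser")
    return [h2.get_text(strip=True) for h2 in soup.select(f".{container_class} h2")]


if __name__ == "__main__":
    page = fetch_rendered_html("https://angular.io/", "text-container")
    for heading in parse_headings(page):
        print(heading)
```

Splitting rendering from parsing keeps the browser session short and lets you test parse_headings() against saved HTML files.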

Best Practices and Handling Anti-Scraping Techniques

When scraping dynamic websites, it's essential to be aware of common anti-scraping defenses and employ best practices to handle them effectively. Here are some strategies to overcome scraping challenges:

- IP rotation: Websites often block or limit requests from the same IP address. Use a pool of proxy servers to rotate IP addresses and avoid detection.

- Respect robots.txt: Check the website's robots.txt file to understand their crawling policies and respect the specified rules to maintain ethical scraping practices.

- Introduce random delays: Avoid sending requests at a fixed interval, as it can be easily detected. Introduce random delays between requests to mimic human behavior.

- Use user agent rotation: Rotate user agent strings to prevent websites from identifying and blocking your scraper based on a specific user agent.

- Handle CAPTCHAs: Some websites employ CAPTCHAs to prevent automated scraping. Consider using CAPTCHA solving services or libraries to bypass them when necessary.

- Avoid honeypot traps: Be cautious of hidden links or elements designed to trap scrapers. Analyze the page structure and avoid following links that are invisible to users.

- Monitor website changes: Websites may update their structure or anti-scraping techniques over time. Regularly monitor your scraper's performance and adapt to any changes.
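Rotating IP addresses and user agents mostly comes down to varying what each request sends. This sketch builds per-request keyword arguments in the shape the requests library accepts (headers, proxies, timeout); the user-agent strings and proxy URLs are made-up placeholders, and rotating_request_kwargs is a hypothetical helper.

```python
import itertools
import random

# Hypothetical placeholders: substitute real user-agent strings and proxy
# URLs that you are permitted to use.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
]
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def rotating_request_kwargs(user_agents, proxies, timeout=10):
    """Yield keyword arguments for requests.get(), one dict per request.

    Proxies are used round-robin; the User-Agent header is picked at random.
    """
    if not user_agents or not proxies:
        raise ValueError("need at least one user agent and one proxy")
    for proxy in itertools.cycle(proxies):
        yield {
            "headers": {"User-Agent": random.choice(user_agents)},
            # requests expects a mapping from URL scheme to proxy URL.
            "proxies": {"http": proxy, "https": proxy},
            "timeout": timeout,
        }
```

With requests installed, each fetch would then look like requests.get(url, **next(plan)), where plan = rotating_request_kwargs(USER_AGENTS, PROXIES). Round-robin proxies spread load evenly, while random user agents avoid a predictable pattern.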

In addition to these technical considerations, it's crucial to be mindful of the legal and ethical aspects of web scraping. Always review the website's terms of service and respect their data usage policies. Scrape responsibly and avoid overloading the website's servers with excessive requests.
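Respecting robots.txt and avoiding server overload can be combined: Python's urllib.robotparser answers whether a URL may be fetched and reports any Crawl-delay, which can then seed a randomized pause between requests. In this sketch the rules are parsed from a string so the example runs offline; for a live site, RobotFileParser also offers set_url() and read(). load_rules, polite_delay, the my-scraper user agent, and the 2-second default are assumptions for illustration.

```python
import random
from urllib.robotparser import RobotFileParser


def load_rules(robots_txt):
    """Build a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(rules, user_agent, default=2.0):
    """Seconds to pause before the next request to the same site.

    Uses the site's Crawl-delay for `user_agent` when one is declared,
    otherwise `default`, then adds up to 50% random jitter so requests
    don't arrive at a fixed interval.
    """
    declared = rules.crawl_delay(user_agent)
    base = float(declared) if declared is not None else float(default)
    return base + random.uniform(0, base * 0.5)


if __name__ == "__main__":
    rules = load_rules("User-agent: *\nDisallow: /private/\nCrawl-delay: 5")
    for url in ["https://example.com/products", "https://example.com/private/data"]:
        if rules.can_fetch("my-scraper", url):
            print(f"allowed: {url}, wait {polite_delay(rules, 'my-scraper'):.1f}s")
        else:
            print(f"skipped by robots.txt: {url}")
```

Between requests you would call time.sleep(polite_delay(rules, "my-scraper")), which also covers the random-delay advice above.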

By implementing these best practices and handling anti-scraping techniques effectively, you can ensure a more reliable and sustainable web scraping process while maintaining respect for the websites you scrape.

Introduction

In today's digital landscape, web scraping has become an essential tool for businesses, researchers, and developers to extract valuable data from websites. However, the rise of dynamic websites has introduced new challenges to the scraping process. Unlike static websites, dynamic websites load content asynchronously using JavaScript and AJAX calls, making it difficult for traditional scraping techniques to capture the desired data.

This comprehensive tutorial aims to equip you with the knowledge and skills necessary to tackle the challenges of scraping dynamic websites using Python. We will explore the fundamental differences between static and dynamic websites, discuss the common obstacles encountered, and provide step-by-step guidance on setting up your Python environment for dynamic scraping.

Throughout this tutorial, we will introduce essential tools and libraries such as Selenium, BeautifulSoup, and Requests-HTML, which will enable you to interact with dynamic websites effectively. You'll learn how to navigate through pages, execute JavaScript, and extract data from AJAX-loaded content.

Furthermore, we will delve into practical examples, demonstrating how to apply these techniques to real-world scenarios. You'll gain hands-on experience in scraping dynamic websites while overcoming common anti-scraping defenses like CAPTCHAs, IP blocking, and rate limits.

By the end of this tutorial, you will have a solid understanding of the best practices and ethical considerations involved in web scraping. You'll be well-prepared to tackle modern web scraping challenges and extract valuable data from dynamic websites efficiently and responsibly.

Let's dive in and uncover the power of dynamic web scraping with Python!

Save time and automate your scraping tasks. Use Bardeen to automate web scraping with ease.

.png)

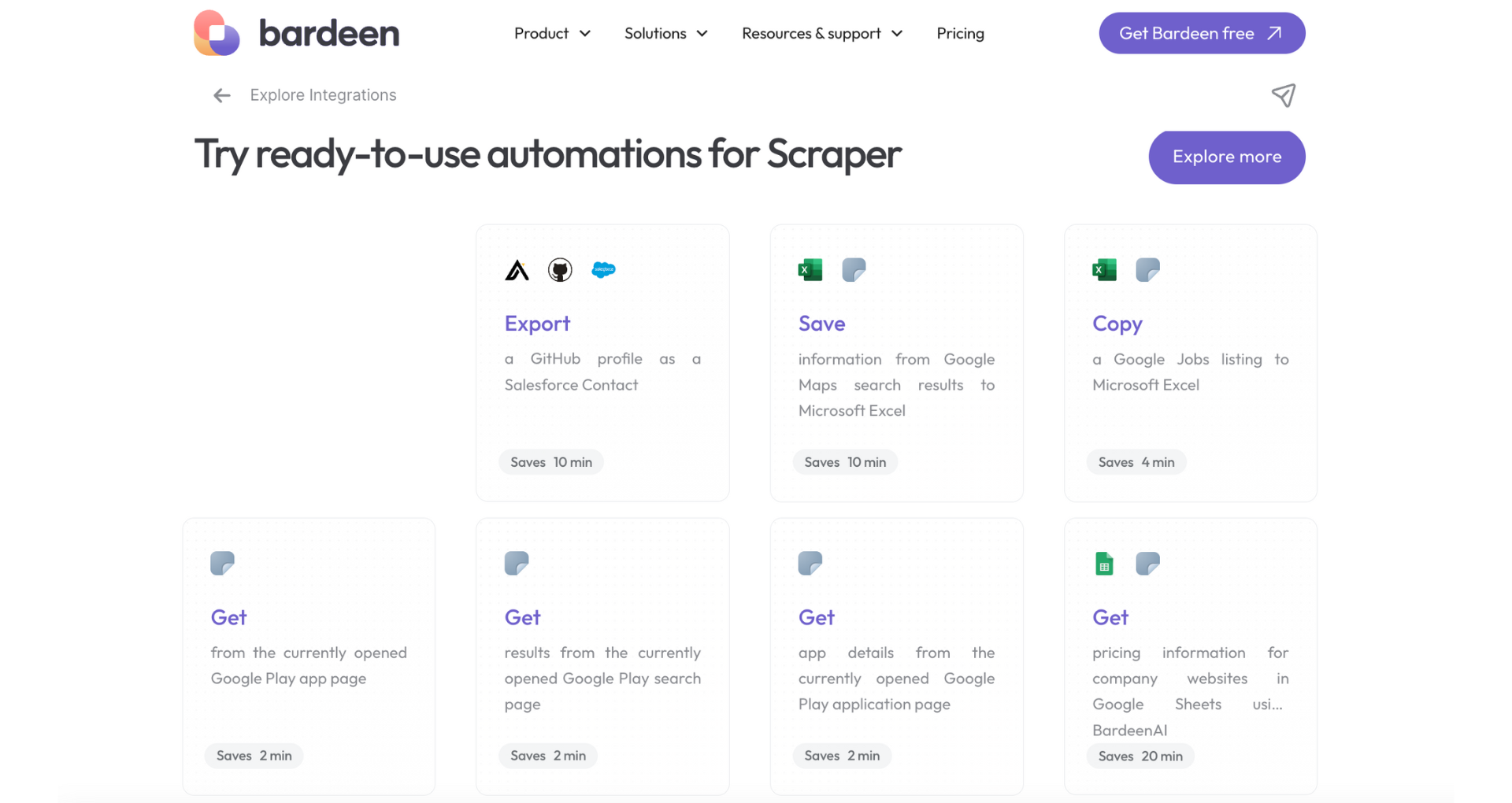

Automate Your Web Scraping with Bardeen's Playbooks

Web scraping dynamic websites can be a complex task due to the asynchronous loading of content. While the manual methods involving Python, Selenium, and Beautiful Soup provide a solid foundation, automating these processes can significantly enhance efficiency and accuracy. Bardeen, with its advanced Scraper integration, offers a powerful suite of playbooks to automate web scraping tasks, eliminating the need for extensive coding and manual intervention.

Here are some examples of how Bardeen can automate the process of web scraping dynamic websites:

- Get web page content of websites: This playbook automates the extraction of website content from a list of URLs in Google Sheets, updating the spreadsheet with the website's content. It's particularly useful for content aggregation and SEO analysis.

- Extract information from websites in Google Sheets using BardeenAI: Leverage Bardeen's web agent to automatically scan and extract any information from websites, directly into Google Sheets. Ideal for market research and competitive analysis.

- Send a screenshot of a web page to Slack periodically: This playbook periodically sends screenshots of specified websites to Slack. It's perfect for monitoring changes on competitor websites or sharing information with team members.

By automating web scraping tasks with Bardeen, you can save time, increase productivity, and ensure that your data collection is as accurate and up-to-date as possible. Start automating today by downloading the Bardeen app at Bardeen.ai/download.
